DataBus - Vol 41 No. 3: April-May, 2001

So you want to build a Storage Area Network (SAN). There's nothing to it, right? Isn't everyone building a SAN? Why not you? After all, you want your facility to be seen as a leading-edge data center, and you're probably thinking that you can't do it without a SAN. Before you step into the "SAN box," there are a few issues you'll need to consider. You'll need to know what a SAN really is and what it isn't. You'll also need to know the difference between a SAN and Network Attached Storage (NAS). And finally, do you have a handle on where networked storage technology is headed? Part I should help you become familiar with networked storage and set appropriate expectations for the networked storage solutions available, NAS and SANs in particular. It should also help you set realistic goals for deploying storage in networked environments.

Networked Storage 101

There are two popular forms of networked storage today: Network Attached Storage, or NAS, and Storage Area Networks, or SANs. Both NAS and SAN allow multiple servers or clients to share storage resources, but they differ greatly in their implementation. The following diagram shows how NAS and SAN can be differentiated based on connectivity.

In client-server computing, multiple clients (PCs or workstations) talk to a server over a local area network, or LAN. A single server can service hundreds of clients, depending on the configuration. Now, imagine those same clients seamlessly accessing storage resources without going through a traditional general-purpose file server. This is Network Attached Storage, or NAS. Next, picture the same principle, except that instead of multiple clients seamlessly accessing storage, you now have multiple servers accessing the storage resources. This is a Storage Area Network, or SAN.

NAS or SAN — What to Deploy and When

Analysts, editors, vendors, consultants, and customers throw around IT acronyms all the time, and NAS and SAN are no exceptions. NAS and SAN are in the news because end users are rapidly running out of disk space and are looking to networked storage to solve the problem. IT administrators are spending too much time adding new disks to systems and too much time managing the added storage. How do you cut through the hype and clearly position these two different ways of implementing networked storage in your environment?

First, identify what kind of system is talking to the storage device. In NAS, clients share the storage resources. Client communications usually center on transferring or accessing files: copying, printing or sharing files between the client and the server or other clients on the network. In a SAN, however, multiple servers share direct access to the storage resources. Communications between servers and storage are typically at a much lower level than communications to and from clients; servers typically deal with information at the block level.

Given this, one way to decide between NAS and SAN is to understand what is being communicated to or shared within the storage device. If it's file-based communication, then NAS should be the first choice. If it's block-level communication, such as database manipulation or access to a data warehouse, then a SAN is most likely the proper choice.

Another way to look at the difference is to determine where the data needs to be accessed. If the data is accessed by a specialized workgroup over an existing LAN, then the best solution is to locate the storage device within the workgroup (NAS). This reduces the impact the workgroup's traffic would otherwise have on the rest of the network if the storage sat outside the workgroup. Likewise, if data needs to be accessed by many different users in multiple locations or through multiple servers (e.g., email), then putting the storage near the servers, on a SAN, would be the best solution.
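The rules of thumb above can be sketched as a small decision helper. This is only an illustration: the `Workload` fields, the `suggest_storage` function and the majority-vote scoring are assumptions made for this example, not part of any product or standard, and a real decision would weigh cost and existing infrastructure as well.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    """Illustrative description of a storage workload (field names are assumptions)."""
    access_level: str      # "file" or "block"
    shared_by: str         # "clients" or "servers"
    workgroup_local: bool  # True if the data is used mainly inside one workgroup


def suggest_storage(w: Workload) -> str:
    """Return "NAS" or "SAN" by majority vote over the three rules of thumb."""
    if w.access_level not in ("file", "block"):
        raise ValueError(f"unknown access_level: {w.access_level!r}")
    if w.shared_by not in ("clients", "servers"):
        raise ValueError(f"unknown shared_by: {w.shared_by!r}")

    # Each rule that points toward NAS counts as one vote.
    nas_votes = sum([
        w.access_level == "file",   # file-based traffic favors NAS
        w.shared_by == "clients",   # clients sharing storage favors NAS
        bool(w.workgroup_local),    # data confined to a workgroup favors NAS
    ])
    # Three votes are cast, so there is never a tie.
    return "NAS" if nas_votes >= 2 else "SAN"


if __name__ == "__main__":
    cad = Workload(access_level="file", shared_by="clients", workgroup_local=True)
    database = Workload(access_level="block", shared_by="servers", workgroup_local=False)
    print("CAD drawings:", suggest_storage(cad))
    print("Database:", suggest_storage(database))
```

A workgroup sharing CAD drawings lands on NAS, while a database shared by several servers lands on SAN, matching the guidance in the text.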

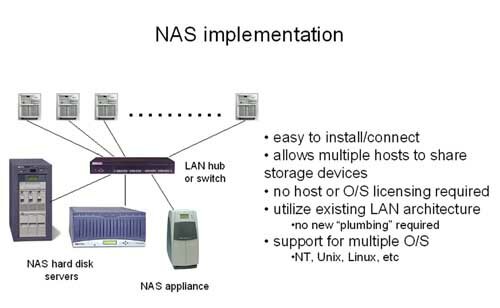

NAS — Easy Does It

One of the key attributes of NAS devices is that they install within the existing LAN infrastructure. This makes them very easy to install and configure. Typically, all that is required is a device name and an IP address. If the NAS device supports DHCP, then only the name is required, because the DHCP server automatically assigns the NAS device an IP address. To install and configure a NAS device, the user typically runs a web-based interface to set the device name and IP address. The NAS device is then rebooted and made available to all clients on the network. It usually takes between 10 and 15 minutes to install and configure a NAS device.

NAS devices use special "thin servers" to connect to the network and provide access to storage resources. These thin servers are slimmed-down versions of regular file servers, with specialized software that handles a minimal set of network and storage tasks. On the network, NAS devices allow clients to access storage. The figure below shows how a NAS device would be connected in a typical workgroup configuration.

The downside of this simple approach lies in managing NAS resources. Because NAS devices are specialized, they don't always integrate well with standard storage management applications. For example, backup applications typically require agents to be installed on the servers they back up. Since NAS devices don't have a full operating system, it may not be feasible to load the exact agent required. This means some NAS storage devices use proprietary backup methods, or they must be backed up over the network, increasing network traffic and load.

Also, because of their special operating systems, NAS devices don't always integrate into the network management suite used by your organization. The best NAS devices will typically integrate into system management software such as OpenView or Unicenter: devices are mapped in the system management view, and the administrator can launch the NAS management tools directly from within these applications. Some NAS devices only send SNMP messages to these system management applications, which then decipher each message and display it to the administrator. Because of the wide variety of NAS solutions, not all messages can be deciphered easily, potentially complicating the network management task for the system administrator.

Data security is another issue to address when considering the deployment of NAS devices. Today, most NAS solutions integrate with the O/S security system, and access to a NAS device can be controlled via the O/S security and permissions settings. These settings are managed on the Primary Domain Controller. However, if O/S security is breached, then so is access to the NAS device.

As noted, most NAS devices should be deployed in workgroup or departmental environments. Typically, workgroups are set up based on the tasks that the clients perform, and the client systems in a workgroup share files and the applications that generate them. By using a NAS storage device such as a hard drive server, each client in the workgroup can store similar or shared information locally within the workgroup. The advantage is that by limiting network traffic outside the workgroup, the bandwidth between workgroups is freed up.

In the diagram above, all file transactions from workgroup A pass through the hub to the server and then to the disk drive. The same applies to workgroups B and C. In the lower right diagram, using NAS devices, file transactions that are specific to workgroup A never have to travel outside the workgroup. The same applies to workgroup B. This means there is less traffic between workgroups than in the non-NAS configuration. Additionally, if the hub connecting the three workgroups to the server fails, workgroups A and B can still access data stored in the NAS devices connected to their respective workgroups.

In summary, NAS devices are ideal for workgroup and departmental environments where file sharing or file-based transactions occur within individual groups, such as sharing CAD drawings or email client archive files. NAS devices can isolate network transactions within a workgroup and reduce the load on overall network bandwidth. NAS devices can also be placed throughout a network, so that data is stored closer to client systems. Should a segment of the network fail, data within specific workgroups can still be processed, improving performance, reliability and availability.

SAN – The Fix-All for Storage Consolidation

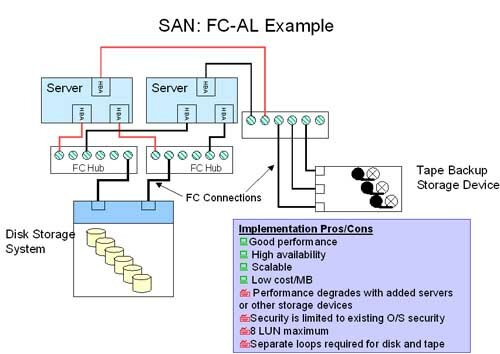

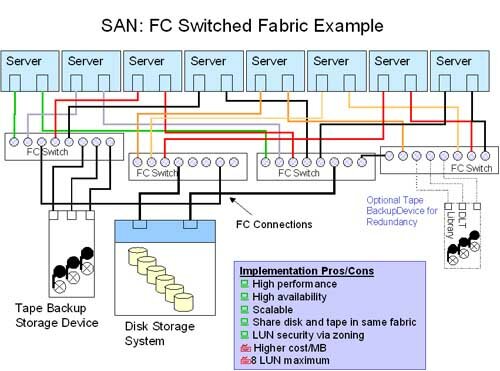

Customers who need to share storage resources among many different servers would benefit from a SAN: a high-speed network deployed exclusively for transmitting data to and from storage devices. Sharing storage resources in this manner improves manageability, reduces IT workload and improves access to and availability of data.

SANs are still in their infancy. So are SAN standards, particularly in the area of connectivity. Today, SANs are designed and deployed using high-speed fibre channel (FC) connections between devices. These can be referred to as FC-SANs. FC-SANs can provide data at speeds of up to 100MB/sec and support multiple protocols. Protocols such as SCSI and TCP/IP can be transmitted over the same fibre channel network. Most high-end storage devices in use today use the SCSI interface for communications, which makes fibre channel well suited for networking storage devices to servers. Since most individual SCSI devices transmit data at between 2 and 20MB/sec, several devices can communicate simultaneously, at full speed, on the same fibre channel network.

As with any network, it takes more than just wires to connect devices in a SAN. Host adapters, hubs and switches are required in FC-SANs, just as in Ethernet LANs. It also takes software and other elements to build a fully functional SAN environment.

Fibre channel can be integrated between storage devices and servers in three major ways. The first and most straightforward is "point to point." A fibre channel connection is made directly between the server and the storage device. In the following illustration, the FC60 disk system and DLT library are each connected directly to the server. The FC60 is a dual-channel device for redundancy and thus requires two connections, each requiring a host adapter. Similarly, the direct connection to the DLT library requires a separate host adapter.
This kind of implementation provides very high performance and high data availability via redundant data paths to the FC60. It is limited in terms of expandability, since other servers cannot be added without disconnecting the various components and rebooting the server.

Another configuration for an FC-SAN is called arbitrated loop, or FC-AL. In this configuration, hubs are used to allow a storage device to be shared by multiple servers. In the configuration shown, three hubs connect the disk system and tape backup device to the server via a host bus adapter (HBA). Two hubs are used for the disk system connections for redundancy: should one hub fail, the other will provide access to the data stored in the disk system. Each group of connections between a single hub and the storage devices and servers is referred to as a "loop."

One limitation of the FC-AL implementation is that disk and tape subsystems should not reside on the same loop. This is because a loop initialization process (LIP) can be generated at any time by any device on the loop. If a tape drive and disk drive share the same loop and the tape drive fails, a LIP is generated and data being transmitted to the disk drive can be lost without the system knowing it. Obviously, this is not acceptable, so in practice disk and tape devices in an FC-AL implementation should be on separate loops.

Another issue in the FC-AL environment is that the bandwidth to and from a hub is shared, just as in the Ethernet world. For example, if four ports in a hub are in use and all are active at once, the effective bandwidth per device port is about 33MB/sec: the port to the host provides 100MB/sec, and the three other ports share that bandwidth, so the effective transfer rate is 100/3, or roughly 33MB/sec. This means that the more devices that are connected in an FC-AL topology, the lower the net effective transfer rate.
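The shared-bandwidth arithmetic for a hub can be written out as a short calculation. This is a minimal sketch that assumes, as the example in the text does, one host port whose bandwidth is split equally among the other active ports; the function name is made up for illustration, and real loops will not divide bandwidth this evenly.

```python
def effective_device_bandwidth(host_port_mb_per_sec: float, active_ports: int) -> float:
    """Bandwidth available to each device port on a shared hub, in MB/sec.

    One active port is assumed to be the host port; the remaining active
    ports are assumed to share its bandwidth equally.
    """
    if active_ports < 2:
        raise ValueError("need the host port plus at least one device port")
    device_ports = active_ports - 1
    return host_port_mb_per_sec / device_ports


if __name__ == "__main__":
    # Show how the per-device rate falls as more ports become active.
    for ports in (2, 3, 4, 8):
        rate = effective_device_bandwidth(100, ports)
        print(f"{ports} active ports: {rate:.1f} MB/sec per device port")
```

With four active ports the function reproduces the 100/3 figure from the text, and adding ports lowers the per-device rate further.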
The third and final way in which FC-SANs can be configured is called FC switched fabric. In the switched environment, hubs and switches are used to connect devices. The advantage of switches is that they can be configured to provide full bandwidth between any two ports, so they don't suffer from the shared-bandwidth limitations of hubs. The figure below shows an FC-switched implementation.

In this configuration, eight servers share access to the FC60 and tape library. Again, multiple sets of switches are used to connect the servers to the disk array. Each server has two independent data paths to the FC60. The switches are cascaded in pairs. The tape library is directly connected to one switch, which gives each server access to it through the switch pair. A second library can be added for redundancy to ensure that backup operations can be completed even in the event of a switch failure.

In this switched environment, software is used to direct the connections between switch ports. Each port pair can operate at 100% bandwidth, independent of any other port pair on the switch. This type of configuration provides maximum performance and scalability, but it is also the most expensive, due to the complexity and cost of the switches.

In addition to the hardware elements, software must be deployed that enables the customer to manage the SAN environment. This includes monitoring activity and events, as well as configuring the SAN components to allow for streamlined communications between devices. One critical role the software can play in a SAN fabric is to dedicate specific FC switch ports for use by certain server and storage combinations. This is referred to as zoning. Setting up specific zones in a switch allows performance to be tailored and provides an added layer of security, since it limits access to specific storage devices.
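The idea behind zoning can be illustrated with a simple membership check. This is a conceptual sketch only: the zone names, device names and the `can_communicate` function are hypothetical, and it does not represent any vendor's zoning syntax or management tool.

```python
from typing import Dict, Set

# Hypothetical zone table: zone name -> names of member devices.
ZONES: Dict[str, Set[str]] = {
    "zone_database": {"server_1", "server_2", "disk_array"},
    "zone_backup": {"server_3", "tape_library"},
}


def can_communicate(a: str, b: str, zones: Dict[str, Set[str]] = ZONES) -> bool:
    """Two devices may talk only if at least one zone contains both of them."""
    return any(a in members and b in members for members in zones.values())


if __name__ == "__main__":
    print(can_communicate("server_1", "disk_array"))  # both are in zone_database
    print(can_communicate("server_3", "disk_array"))  # no zone contains both
```

Here `server_3` can reach the tape library but not the disk array, which is the access-limiting effect the text describes.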

SAN – The Challenge

As we've noted, SANs are in their infancy, and there are challenges that must be addressed before you can comfortably implement a true SAN solution.

Interoperability. Several of the major governing bodies are still developing standards for switch protocols, networking compatibility and other elements. Also, each major vendor has to test all the components that are required to build a SAN. This is not a trivial task, and as a result, vendors are typically certifying only their own brand of storage products for use in the SAN. Vendor A products won't necessarily work with Vendor B products.

Price of entry. Because the various elements needed to build a SAN are new, they are expensive. Economies of scale have not been reached. Deploying a SAN can be an expensive, long-term investment that you must evaluate carefully.

The Future – NAS and SAN

Access to information anytime, anywhere will be a key driver in the future as teachers, students and parents want more data at their fingertips, regardless of where they are. Both NAS and SAN can play a role in making this type of data access a reality. What might we encounter on the road to the future?

Now you have the basics of two types of networked storage. In Part II we'll tell you the specifics of our networked storage needs and which solution worked best for us. We'll also discuss deployment strategies and tips for a smooth transition to a new storage environment.

Glossary of Terms

1. LAN: local area network; a network, typically Ethernet based, that allows servers, clients and storage devices to communicate with one another.
2. SAN: storage area network; a dedicated, high-speed network implementation allowing multiple servers to share access to storage devices.
3. NAS: network attached storage; refers to storage devices or appliances that can be shared among computers when attached to a LAN.
4. FC: fibre channel; a high-speed interface that is used to connect servers and storage devices together. This interface is capable of processing multiple communication protocols at one time.
5. HBA: host bus adapter; a device added to a server to allow communications to network components and storage devices.
6. Hub: a networking device that allows multiple devices to communicate with one another by sharing available network bandwidth.
7. Switch: a networking device that allows multiple devices to communicate with one another by dedicating available bandwidth between devices.
8. FC-Tape: a communication standard that will allow for disk and tape devices to communicate directly with each other over a fibre channel SAN.

CEDPA